We're running into a NullPointerException (NPE) being logged when we set up a mapping to an S3 path from within our Application.cfc:

```
<cfset this.mappings["/mappingname"] = variableRetrievedFromEnvVar>
```

The variable is set to `s3://[KEY]:[SECRETKEY]@s3.amazonaws.com/[bucketname]/[folder]`.

This code has worked for years with other buckets, including one I have just created in the same account and use from the same application. However, for another bucket I have just created, and also for a bucket that was created several years ago, the following NullPointerException is logged in the application log:

```
"ERROR","XNIO-2 task-3","06/13/2024","09:50:18","S3","java.lang.NullPointerException;java.lang.NullPointerException
at java.base/java.lang.reflect.Method.invoke(Unknown Source)
at org.lucee.extension.resource.s3.S3Properties.getApplicationData(S3Properties.java:184)
at org.lucee.extension.resource.s3.S3ResourceProvider.loadWithNewPattern(S3ResourceProvider.java:159)
at org.lucee.extension.resource.s3.S3ResourceProvider.getResource(S3ResourceProvider.java:102)
at lucee.commons.io.res.ResourcesImpl.getResource(ResourcesImpl.java:169)
at lucee.runtime.config.ConfigImpl.getResource(ConfigImpl.java:2331)
at lucee.runtime.config.ConfigWebUtil.getExistingResource(ConfigWebUtil.java:426)
at lucee.runtime.MappingImpl.initPhysical(MappingImpl.java:165)
at lucee.runtime.MappingImpl.getPhysical(MappingImpl.java:363)
at lucee.runtime.MappingImpl.check(MappingImpl.java:494)
at lucee.runtime.config.ConfigWebHelper.getApplicationMapping(ConfigWebHelper.java:167)
at lucee.runtime.config.ConfigWebImpl.getApplicationMapping(ConfigWebImpl.java:334)
at lucee.runtime.listener.AppListenerUtil.toMappings(AppListenerUtil.java:309)
at lucee.runtime.listener.AppListenerUtil.toMappings(AppListenerUtil.java:290)
at lucee.runtime.listener.ModernApplicationContext.getMappings(ModernApplicationContext.java:1032)
at lucee.runtime.listener.AppListenerUtil.toResourceExisting(AppListenerUtil.java:880)
at lucee.runtime.listener.AppListenerUtil.loadResources(AppListenerUtil.java:853)
at lucee.runtime.orm.ORMConfigurationImpl._load(ORMConfigurationImpl.java:142)
at lucee.runtime.orm.ORMConfigurationImpl.load(ORMConfigurationImpl.java:125)
at lucee.runtime.listener.AppListenerUtil.setORMConfiguration(AppListenerUtil.java:541)
at lucee.runtime.listener.ModernApplicationContext.reinitORM(ModernApplicationContext.java:434)
at lucee.runtime.listener.ModernApplicationContext.<init>(ModernApplicationContext.java:368)
at lucee.runtime.listener.ModernAppListener.initApplicationContext(ModernAppListener.java:460)
at lucee.runtime.listener.ModernAppListener._onRequest(ModernAppListener.java:118)
at lucee.runtime.listener.MixedAppListener.onRequest(MixedAppListener.java:44)
at lucee.runtime.PageContextImpl.execute(PageContextImpl.java:2494)
at lucee.runtime.PageContextImpl._execute(PageContextImpl.java:2479)
at lucee.runtime.PageContextImpl.executeCFML(PageContextImpl.java:2450)
at lucee.runtime.engine.Request.exe(Request.java:45)
at lucee.runtime.engine.CFMLEngineImpl._service(CFMLEngineImpl.java:1215)
at lucee.runtime.engine.CFMLEngineImpl.serviceCFML(CFMLEngineImpl.java:1161)
at lucee.loader.engine.CFMLEngineWrapper.serviceCFML(CFMLEngineWrapper.java:97)
at lucee.loader.servlet.CFMLServlet.service(CFMLServlet.java:51)
..."
```

I am using Lucee 5.4 and have tried 5.4.6.9, 5.4.5.23 and 5.4.3.38, with identical results.

After this NPE has been logged, accessing the S3 location through the mapping behaves as though the mapping were not defined.

Because this code has worked for years with other buckets, I suspected the issue might be something on the AWS side, but I'm struggling to identify what it could be.

I have tested accessing the bucket path defined in the S3 mapping directly through the AWS CLI, using the same AWS key and secret, and can read from and write to the bucket as expected under the defined permissions.

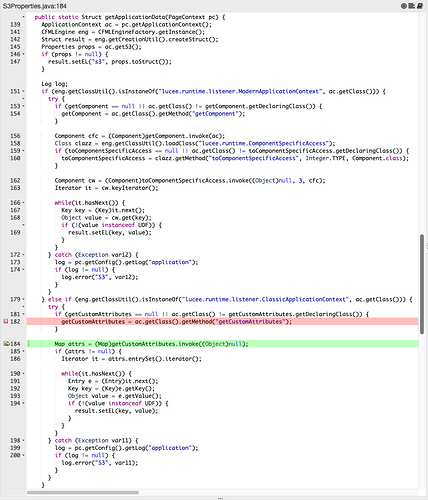

I am not a Java expert, but as far as I can tell, this is where in the S3 extension the NPE is generated:

- `org.lucee.extension.resource.s3.S3Properties.getApplicationData(S3Properties.java:184)`

```java
Map attrs = (Map)getCustomAttributes.invoke((Object)null);
```

I believe this is attempting to call `lucee.runtime.listener.ClassicApplicationContext.getCustomAttributes()` via reflection, through what looks like a mixin.

This code runs inside the `S3Properties` function `public static Struct getApplicationData(PageContext pc)`, at a point where it appears to be reading the config values defined in the application (presumably defaults set in Application.cfc and similar), not anything specific to the S3 path defined in the mapping. That leaves me struggling to see how the issue could have anything to do with the AWS configuration of the bucket.
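One detail in the trace may support that reading. The top frame is `java.lang.reflect.Method.invoke`, and the logged exception is a bare `NullPointerException`, not an `InvocationTargetException`. In Java, if a method called through `Method.invoke` throws, that exception is wrapped in `InvocationTargetException`; `Method.invoke` itself throws `NullPointerException` when the target object is `null` and the method is an instance method. Taking the decompiled `invoke((Object)null)` at face value, and assuming `getCustomAttributes()` is an instance method, the NPE would come from the call having no target object rather than from anything inside `getCustomAttributes()` or anything bucket-specific. This is an interpretation of the trace, not a confirmed diagnosis. The minimal sketch below reproduces both cases using a hypothetical `HypotheticalAppContext` class; it is not Lucee or S3 extension code.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.Collections;
import java.util.Map;

public class ReflectiveNullReceiverDemo {

    // Hypothetical stand-in for an application context; NOT Lucee's actual class.
    public static class HypotheticalAppContext {
        // Ordinary instance method, similar in shape to getCustomAttributes().
        public Map<String, Object> getCustomAttributes() {
            return Collections.singletonMap("exampleKey", "exampleValue");
        }

        // Instance method whose own body fails, to show how Java reports that case.
        public Map<String, Object> brokenGetCustomAttributes() {
            throw new NullPointerException("simulated failure inside the method body");
        }
    }

    // Reflectively invokes the named no-arg method on the receiver and reports
    // which exception type (if any) escapes Method.invoke.
    public static String outcome(Object receiver, String methodName) {
        try {
            Method m = HypotheticalAppContext.class.getMethod(methodName);
            m.invoke(receiver); // a null receiver is only valid for static methods
            return "none";
        } catch (NullPointerException e) {
            // Thrown by Method.invoke itself: null target for an instance method.
            return "NullPointerException";
        } catch (InvocationTargetException e) {
            // The invoked method threw; the original exception is e.getCause().
            return "InvocationTargetException";
        } catch (ReflectiveOperationException e) {
            // For example NoSuchMethodException or IllegalAccessException.
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        HypotheticalAppContext ctx = new HypotheticalAppContext();
        System.out.println("real receiver:  " + outcome(ctx, "getCustomAttributes"));
        System.out.println("null receiver:  " + outcome(null, "getCustomAttributes"));
        System.out.println("failing method: " + outcome(ctx, "brokenGetCustomAttributes"));
    }
}
```

Running `main` reports `none` for the normal call, `NullPointerException` for the `null` target, and `InvocationTargetException` when the invoked method's own body throws. Only the second case matches the shape of the logged trace.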

Has anyone encountered this before, or can anyone see a likely cause? I'm scratching my head. On the one hand, I think it must be an error on our side in how we have configured the bucket (quite possible, although we have defined dozens of buckets in multiple Lucee applications in a similar way and never run into this issue before). On the other hand, the location and nature of the exception suggest it is not even looking at what we have defined yet, but is only checking the general config first.

We got this far in the investigation, to the point of identifying the specific trigger point, by using the FusionReactor (FR) production debugger. That was not easy: the S3 extension catches and logs the error in Java without any context about the calling CFML, and the failure is effectively silent to the calling application (no exception is shown; the mapping simply appears not to be defined).

Any assistance in identifying the cause would be appreciated.

- OS: Linux (6.6.12-linuxkit), running in a Docker container from ortussolutions/commandbox:3.9.4
- Java version: 11.0.23 (tried others)
- CommandBox version: 6.0.0 (tried others)
- Lucee version: 5.4.6.9 (tried others)