Are all these changes in 6.2.5.7-SNAPSHOT?

Doh, correcting.

It’s working OK locally.

We updated our live deployments with the suggested workaround connection pool settings, and are seeing fewer connections in use.

Hi, can you maybe share with us which “suggested” connection pool settings you are using?

Just for comparison.

Thank you.

There are plenty of articles out there about sizing connection pools. It’s not really Lucee specific; it depends on your server capacity. FAFO?

Hi, can you maybe share with us what “suggested” connection pool settings are you using?

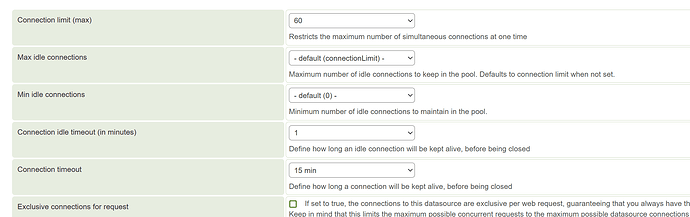

I was referring to the “partial workaround” in LDEV-5962.

We were using 100 as the connectionLimit, so have added a matching maxTotal and an idleTimeout.

At that scale, there’s not been much change in the number of connections in absolute or percentage terms, at least on our production workloads (a typical short-running, sub-1s, API-call type application).

Our staging stack has seen a large uptick (~50%) in connections, but has lower traffic, so we’re probably seeing the pooling working better. However, we’ve concurrently changed our health checks to include a database query (so if the pool locks up even for one application.name, we replace the whole instance), so the picture is muddled there.

But, no ill effects so far.
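For anyone wanting to try the same kind of health check, here is a rough sketch of the idea in Python rather than CFML. Everything in it is an assumption for illustration: `probe_database` and `is_healthy` are hypothetical names, SQLite stands in for the real datasource, and the 2 second timeout is arbitrary. The point is only that a probe which hangs (as it would against a locked-up pool) gets reported as unhealthy instead of blocking the check.

```python
import concurrent.futures
import sqlite3
from typing import Callable


def probe_database() -> bool:
    """Hypothetical probe: run a trivial query and confirm a row comes back."""
    conn = sqlite3.connect(":memory:")  # stand-in for the real datasource
    try:
        return conn.execute("SELECT 1").fetchone() == (1,)
    finally:
        conn.close()


def is_healthy(probe: Callable[[], bool], timeout_seconds: float = 2.0) -> bool:
    """Unhealthy if the probe returns False, raises, or does not finish in time."""
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(probe)
    try:
        return bool(future.result(timeout=timeout_seconds))
    except concurrent.futures.TimeoutError:
        # A locked-up pool looks like a probe that never returns.
        return False
    except Exception:
        return False
    finally:
        # Do not make the health endpoint wait for a stuck probe thread.
        executor.shutdown(wait=False)
```

The load balancer or orchestrator would then replace the instance after some number of consecutive unhealthy responses; that threshold is deployment specific and not shown here.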

So I’m running Lucee 6.2.3.35 in production, and I’ve been running into a phantom connections issue constantly, under heavy load.

I’ve been able to mitigate the issue somewhat, just by ensuring there’s no more than a 2:1 ratio of maxConnections/maxThreads configured in server.xml versus the available max database connections.

But I was never able to eliminate the occasional phantom connection from happening under load. This is a production application that receives somewhere around an average of 450 requests per second.
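To make the 2:1 rule of thumb concrete, here is a small sketch of the arithmetic. It assumes the ratio means "connector threads are at most twice the database connections available to that server", which is my reading of the above and not something the setting names enforce. The function names are made up for illustration.

```python
def max_threads_for(db_max_connections: int, ratio: float = 2.0) -> int:
    """Largest connector thread count that keeps threads:connections <= ratio."""
    if db_max_connections <= 0 or ratio <= 0:
        raise ValueError("db_max_connections and ratio must be positive")
    return int(db_max_connections * ratio)


def within_ratio(max_threads: int, db_max_connections: int, ratio: float = 2.0) -> bool:
    """True when the configured thread count respects the chosen ratio."""
    return max_threads <= db_max_connections * ratio
```

For example, with 100 database connections available to a server, `max_threads_for(100)` gives 200, so a maxThreads of 200 passes `within_ratio` and 201 does not.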

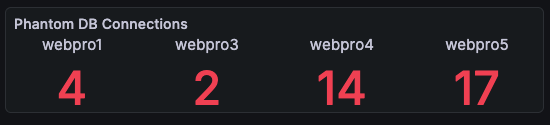

I have 5 big production servers, webpro1-5.

I patched webpro2 with 6.2.5.8 two days ago, and after two days, here are the results for the phantom connections:

Servers 1, 3, 4 and 5 on Lucee 6.2.3.35 have phantom DB connections that will only be cleared by a Lucee restart.

Server 2, webpro2? That has 6.2.5.8 and this issue simply does not recur.

That’s a good enough negative test for me. I’m upgrading all the servers to 6.2.5.8 this weekend during the maintenance window, snapshot or no.

Figured that one out as well; it’s fixed in the latest SNAPSHOT.

https://luceeserver.atlassian.net/browse/LDEV-6051

I now have a new absolute beast of a desktop, a water-cooled AMD 9950X3D with 16 cores / 32 threads and 64 GB of 6000 CL30 RAM, which really made it easier to brutally test this out. My old laptop only had 8 cores, which matched the default maxIdle (8) that Apache Commons Pool enforces.

maxIdle now defaults to connectionLimit unless otherwise configured.
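A quick way to see why the core count mattered: when a burst of connections is returned to the pool, only up to maxIdle of them are kept idle and the rest are closed, so they have to be re-created on the next burst. The sketch below is a toy model in Python, not Lucee's or Commons Pool's actual code; the function names are hypothetical and it ignores eviction timers and the "unlimited" case.

```python
from typing import Optional, Tuple

COMMONS_POOL_DEFAULT_MAX_IDLE = 8  # Apache Commons Pool's default maxIdle


def effective_max_idle(connection_limit: int, max_idle: Optional[int] = None) -> int:
    """Model of the new default: use connectionLimit unless maxIdle is set."""
    return connection_limit if max_idle is None else max_idle


def simulate_burst(borrowed: int, max_idle: int) -> Tuple[int, int]:
    """Return (kept_idle, closed) once `borrowed` connections are all returned."""
    kept = min(borrowed, max_idle)
    return kept, borrowed - kept
```

With 32 concurrent requests and the old default, `simulate_burst(32, COMMONS_POOL_DEFAULT_MAX_IDLE)` keeps 8 and closes 24. With a connectionLimit of 50 and no explicit maxIdle, `simulate_burst(32, effective_max_idle(50))` keeps all 32.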

I can try that - 6.2.5.19-SNAPSHOT-nginx, right?

yup

6.2.5.19 does not appear to change the number of database connections we see compared with before (6.2.5.7-SNAPSHOT-nginx), but it hasn’t had a long idle period, such as overnight, yet.

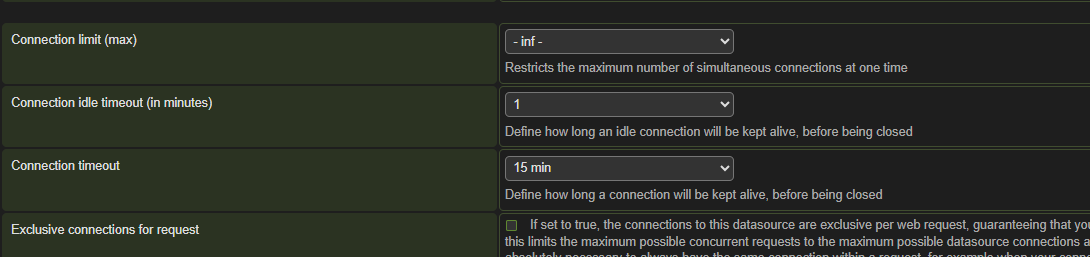

For completeness, we’re using the settings below, plus a class, connectionString, password and username, in the struct for the DSN:

, connectionLimit: 50

, maxTotal: 50

, idleTimeout: 1